I'm sharing how I missed testing for functionality while I was immersed in testing the performance of a Stored Procedure (SP). I was unhappy for a couple of days, because I missed something I have practiced for years.

Now I'm glad to have reinforced this learning, with much more awareness, in my testing's MVT and MVQT.

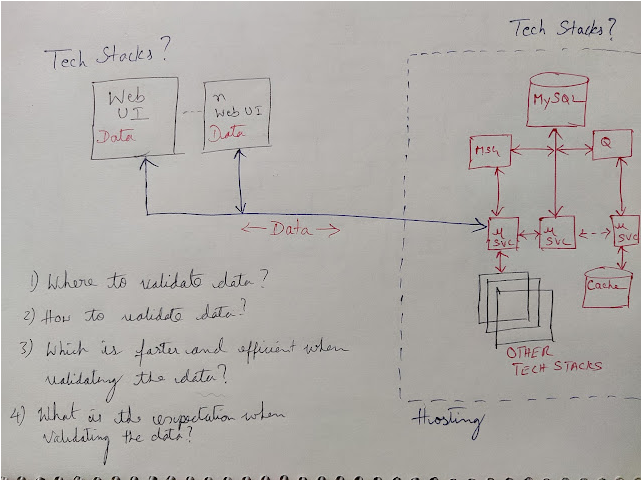

Context of Testing

A Stored Procedure was optimized for better execution time, with no intended change in functionality. This part of the system had not been touched, nor its functionality changed, for a long time (years?). The time taken by the SP was the concern, and I was asked to test the optimization.

The complicated part here was the test data. It took me days to identify and build test data for this optimization, mimicking the production incidents, use cases, and data.

By the time the test data was ready, I was on the fourth day of testing this change.

Where Did I Go Blind By Being Focused?

I prepared the test data solely to evaluate execution time. The same data was useful for testing functionality as well, but my focus was on evaluating performance, not functionality.

The change in the SP did impact functionality. I should have used the large data range to test the functionality of this feature, which involves two SPs. But the task assigned to me was to test only the one SP that was optimized. This is where I went blind!

Are you asking what the impact of this functional problem was?

- Within one complete business workflow, this functional problem added the same data to different sets in subsequent iterations. The result was redundant data, which is not the expected behavior.
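To make "redundant data" concrete, here is a minimal Python sketch of a check for records that landed in more than one set. It assumes a hypothetical table `set_membership(set_id, record_id)` standing in for the real output tables, and uses an in-memory SQLite database only so the example runs on its own; the actual SPs and schema are not shown in this post.

```python
import sqlite3


def find_redundant_records(conn):
    """Return (record_id, set_count) for every record placed in more than one set."""
    query = """
        SELECT record_id, COUNT(DISTINCT set_id) AS set_count
        FROM set_membership
        GROUP BY record_id
        HAVING COUNT(DISTINCT set_id) > 1
        ORDER BY record_id
    """
    return conn.execute(query).fetchall()


# Hypothetical schema and sample data: record 2 was added to set 1 and again to set 2.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE set_membership (set_id INTEGER, record_id INTEGER)")
conn.executemany(
    "INSERT INTO set_membership VALUES (?, ?)",
    [(1, 1), (1, 2), (2, 2), (2, 3)],
)
print(find_redundant_records(conn))  # [(2, 2)]
```

An empty result means every record sits in exactly one set; any row returned points at a redundancy worth investigating.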

I talked only about performance, traces, data I/O, and execution time, because that was the pressing problem. Why? That was the objective given to me.

My testing mission fell short because I did not redefine this objective. Had I redefined it, I would have included functional testing at a proper scale.

If I had redefined it, I would also have pulled the other SP, which is part of this feature's workflow too, into functional testing. Together, these two SPs are expected to handle the data while eliminating redundancy.

It was a simple test, but I had not included it in my testing mission that day.

Why Did I Go Blind?

The performance testing blinded me to functionality, because the basic functional flow appeared to work. But the data count went wrong when a bigger data range was used.

See how stupid I was in my testing! I was testing an SP that had been changed as part of optimizing its execution time, yet I never brought functional testing in. Why? I was focused on the testing objective.

I looked only at the one SP that was optimized. I did not look at the other SP, which has to work along with it later to complete the functional flow of the feature. Why? How was that even possible? I kept asking myself this. I see that it is okay from the perspective of the testing objective I had, but not okay from the perspective of a test engineer, who is supposed to think about impact and prevent problems.

My immersed, concentrated focus on performance and its related activities on one SP for four long days did not let me see this.

What Am I Saying Here?

Although I have tested DBs and ETL systems for years, I did not apply that learning here. What is that learning?

When any part of a system's ETL, SPs, or DB changes, testing the functionality of the business workflow is equally important. Vary the data dimensions (for example, the size of the data range) and evaluate the counts.
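A minimal sketch of "vary the data dimensions and evaluate the counts": run the original and the optimized logic for several data-range sizes and report where the results differ. `original_proc` and `optimized_proc` are hypothetical Python stand-ins for the two versions of an SP, and the defect in `optimized_proc` is invented purely for illustration.

```python
from typing import Callable, Iterable, List, Tuple

Rows = List[Tuple[int, int]]  # (set_id, record_id) pairs


def compare_across_ranges(
    baseline: Callable[[int], Rows],
    candidate: Callable[[int], Rows],
    sizes: Iterable[int],
) -> List[Tuple[int, int, int]]:
    """Return (size, baseline_count, candidate_count) for each size whose results differ."""
    mismatches = []
    for size in sizes:
        expected = baseline(size)
        actual = candidate(size)
        # Compare the counts and, when counts agree, the rows themselves.
        if len(expected) != len(actual) or sorted(expected) != sorted(actual):
            mismatches.append((size, len(expected), len(actual)))
    return mismatches


def original_proc(size: int) -> Rows:
    # Hypothetical stand-in: every record goes into exactly one set of 100.
    return [(record // 100, record) for record in range(size)]


def optimized_proc(size: int) -> Rows:
    # Hypothetical stand-in with an invented defect that only appears on
    # larger ranges: records of set 0 are added again to set 1.
    rows = original_proc(size)
    if size > 1_000:
        rows += [(1, record) for record in range(100)]
    return rows


print(compare_across_ranges(original_proc, optimized_proc, [10, 100, 1_000, 10_000]))
# [(10000, 10000, 10100)]
```

The small sizes pass; only the largest range exposes the difference, which is why checking a single data size is not enough.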

For four days, I was completely hooked on execution time and test data while switching between environments. Inconsistent data across environments misleads easily, and I fell for it this time.

I tell myself: whether a fix is for performance optimization, security, or any other quality criterion, functional testing is as important, and as much of a priority, as running the performance or security tests.

When the DB layer is picked for fixing and optimization, testing functionality at an equal scale is a must. There is a change in the code and/or infrastructure, and it deserves additional attention.

Adding to this, I did not go through and analyze the SP this time; I made that call as part of the test team. That call cost me, and it played a major part in my not thinking about functionality.

My colleague ran a test that varied the data size and completed the business workflow, observed the problem, and informed me. The credit goes to Sandeep.

If I had automated this performance test, I would not have missed this. Why? Because I would have evaluated and asserted on the data returned for each data size. I did not automate here, as there was no need for it in this context.
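A sketch of what such an automated check could look like, assuming Python and hypothetical `reference_proc` / `candidate_proc` stand-ins for the SP before and after optimization: each run is timed, and the returned rows are asserted against the reference before the timing budget is checked. The invented defect in `candidate_proc` exists only for illustration.

```python
import time
from typing import Callable, List, Tuple

Rows = List[Tuple[int, int]]  # (set_id, record_id) pairs


def run_perf_and_functional_check(
    proc: Callable[[int], Rows],
    reference: Callable[[int], Rows],
    size: int,
    max_seconds: float,
) -> float:
    """Time proc(size), then check its rows against reference(size) and the time budget."""
    expected = reference(size)
    start = time.perf_counter()
    actual = proc(size)
    elapsed = time.perf_counter() - start
    # Functional check first: a fast but wrong result is still a failure.
    if sorted(actual) != sorted(expected):
        raise AssertionError(
            f"size={size}: expected {len(expected)} rows, got {len(actual)}"
        )
    if elapsed > max_seconds:
        raise AssertionError(f"size={size}: took {elapsed:.3f}s > {max_seconds}s")
    return elapsed


def reference_proc(size: int) -> Rows:
    # Hypothetical stand-in for the SP before optimization.
    return [(record // 100, record) for record in range(size)]


def candidate_proc(size: int) -> Rows:
    # Hypothetical stand-in for the optimized SP, with an invented defect
    # that repeats one row only on larger data ranges.
    rows = reference_proc(size)
    return rows + rows[:1] if size > 1_000 else rows


for size in (100, 1_000, 10_000):
    try:
        run_perf_and_functional_check(candidate_proc, reference_proc, size, max_seconds=5.0)
        print(f"size={size}: ok")
    except AssertionError as err:
        print(f"FAIL {err}")
```

Raising `AssertionError` explicitly, rather than using bare `assert`, keeps the checks active even when Python runs with `-O`.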

Redefine the testing objective you are given; it helps you see the model of the system as you test.

Respect every fix, and suspect every fix. This helps in the long run!