I read a LinkedIn post yesterday. The post asks for an appropriate contextual message: one that tells the user how to deal with what is shown and how to continue. I find the ask fair and straightforward. I expect the same.

Thanks, Liudas Jankauskas, for writing this LinkedIn post and sharing your experience.

What surprised me is that the discussion was not taken further in the post. As a result, we did not seed the mindset and attitude of a Test Engineering and Prevention discussion. Testing does not prevent; but the outcome of testing can persuade prevention efforts and culture.

Okay! If this post is too long for you to read, here it is in short: this is not an API problem. The API worked seamlessly and did what it is supposed to do. The code 201 returned is right, and it is supposed to be there. Here, 201 is not an HTTP status code. It is a 3DS error code.

Then, whose problem is it? To know that, read the entire blog post. You will thank me and yourself!

I Say This, Before I Start

- I'm writing this post as an interpretation and analysis of the JSON shared in the LinkedIn post.

- There is no intention of pointing fingers at anyone.

- The intention is to share how I [a test engineer] analyze such an incident, and which perspectives I use to analyze it when I experience it.

- This post can become a reference for anyone who is serious about Test Engineering, Prevention, and Testing.

- The intention of this post is to show:

- How to analyze such incidents in the payment context?

- Is this an API problem? What behaviors should be classified as an API problem?

- Which component and layer are supposed to handle it? How and why?

- Which other components and layers are supposed to assist in handling it?

- How to see and interpret HTTP and its status codes in narrow cases like this one?

- It is not always an API problem!

- I'm open to correcting myself, unlearning, learning, and updating this blog post when someone shows me where what I have written here does not make sense.

- I will be thankful to you, and humbled, for helping me correct myself. 🙏

- Feel free to connect and help me if you see I need it.

The Payment Failed; Could Not Buy Ferry Ticket

The user wanted to buy ferry tickets by making an online payment. The payment did not go through, and the user could not buy the ferry tickets.

Instead, the user sees raw JSON on the UI.

The JSON has an error description that reads: "TdsServerTransID is not received in Cres."

To understand what TdsServerTransID and CRes are, one needs to understand the payment gateway flow. Continue reading the next sections to learn about them.

How Is It Layered and How Does It Work?

Before I get into the analysis and say where the problem is, this context requires an understanding of the payment gateway flow. With this understanding, it is easier to see how the failure could have been handled and prevented.

Refer to the pic below. It shows the sequence of interactions in the payment transaction. Note that the pic is not complete; it is limited to what is needed for the context of this blog post.

Pic: The transaction between the 3DS Server and the bank for the challenge, through a POST request. It is shown as CReq and CRes.

The Sequence of Interactions

- I initiate the payment on the merchant's website or app to buy the ferry ticket.

- The merchant creates a transaction ID for tracking. Then, it hands over the rest to the payment gateway.

- The payment gateway uses 3DS to carry out this transaction.

- The 3DS Server initiates the Authentication Request (AReq), which passes through the Directory Server.

- The Directory Server reads the data in the request and forwards it to the bank that issued the card [or account] used in the payment.

- The bank receives the AReq.

- The bank decides whether to give the user a challenge before the payment, or to simply accept the data passed in the AReq.

- In this case, the bank decided to give the user a challenge.

- The bank informs the 3DS Server through the response, the ARes.

- The challenge is usually to enter an OTP received over SMS and authenticate. I presume the same in this case.

- The user enters the OTP and POSTs the request. Let us call this request the CReq. The CReq is sent from the user's browser or app to the bank. At this point, the user interface on the browser or app is handled by the payment gateway, not by the merchant's website or app where the ticket is being bought. The CReq carries:

- tdsServerTransactionId

- messageVersion

- messageType

- challengeWindowSize

- acsTransactionId

- Note that the CReq carries the tdsServerTransactionId, which is generated by the payment gateway. The tdsServerTransactionId is required in the CReq and expected by the bank.

- On receiving the CReq, the bank processes the request. The bank responds back to the 3DS Server; let us call it the CRes.

- The CRes will have:

- tdsServerTransactionId

- acsTransactionId

- challengeCompletionInd

- transStatus

- messageType

- messageVersion

- If all goes as expected, the authorization will be given for the payment.

- If not, the bank responds to the payment gateway (3DS Server) that there is a problem, along with a message.

- It is the payment gateway that has to read this problem and message.

- The payment gateway has to give better contextual information, through its interface, to the merchant who is consuming the service.

- This is key!

- Hope you spotted it here!
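The hand-off of identifiers in the flow above can be sketched in Python. This is a minimal sketch under stated assumptions, not any gateway's implementation: the field names follow the EMV 3DS naming (`threeDSServerTransID`, `dsTransID`, `acsTransID`, `transStatus`), while the classes and the `build_creq` helper are hypothetical. It shows that the CReq does not invent its IDs; it copies them from the ARes that asked for the challenge.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ARes:
    """Authentication Response from the bank (ACS), relayed to the 3DS Server."""
    threeDSServerTransID: str  # generated by the 3DS Server when it built the AReq
    dsTransID: str             # assigned by the Directory Server
    acsTransID: str            # assigned by the bank's ACS
    transStatus: str           # "C" means the bank requires a challenge


@dataclass(frozen=True)
class CReq:
    """Challenge Request: must echo the IDs established by the AReq/ARes exchange."""
    threeDSServerTransID: str
    acsTransID: str
    messageVersion: str
    messageType: str = "CReq"


def build_creq(ares: ARes, message_version: str = "2.1.0") -> CReq:
    """Hypothetical helper: build the CReq only from the IDs carried in the ARes."""
    if ares.transStatus != "C":
        raise ValueError("the bank did not request a challenge; no CReq is needed")
    return CReq(
        threeDSServerTransID=ares.threeDSServerTransID,
        acsTransID=ares.acsTransID,
        messageVersion=message_version,
    )


# Usage with made-up IDs:
ares = ARes("tds-1", "ds-1", "acs-1", transStatus="C")
creq = build_creq(ares)
print(creq.threeDSServerTransID, creq.acsTransID)  # tds-1 acs-1
```

If the ID were ever lost between the ARes and the CReq, it would be lost inside code like `build_creq`, which sits on the payment gateway side.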

The Code 201

Maybe we test engineers presume that the error code 201 returned is an HTTP status code. Ask your team what this code is and why it is returned in the JSON.

Back to 201 here. It is not an HTTP status code. Nor is it a custom error code developed by the payment gateway or by the merchant selling the ferry ticket.

This is a payment-domain-specific code used in payment technology. Yeah! It is the code that tells the payment gateway and the merchant what happened with the initiated payment transaction.

To be more specific to the context, the 201 here is a 3DS protocol error code.

Pic: The 3DS error codes and their descriptions. Image credit: developer.ravelin.com

The above image is shared here so that you know such error codes exist in the payment context. I'm aware of the 3DS protocol, hence I could relate to and understand error code 201. Refer to the References section at the end of this blog post.
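Instead of guessing what 201 means, a gateway (or a test engineer reading logs) can decode the error fields. The sketch below is partial and assumes EMV 3DS field names (`errorCode`, `errorComponent`, `errorDescription`); the code table lists only a few entries, so verify the full list against the references before relying on it. The `describe_error` function and the sample payload are hypothetical, not the actual JSON from the LinkedIn post.

```python
from typing import Any, Dict

# Partial EMV 3DS error code table; check the full list in the references.
ERROR_CODES = {
    "101": "Message Received Invalid",
    "102": "Message Version Number Not Supported",
    "201": "Required Data Element Missing",
    "203": "Format of One or More Data Elements is Invalid",
    "301": "Transaction ID Not Recognized",
    "402": "Transaction Timed Out",
}

# errorComponent: the 3DS component that detected the error.
ERROR_COMPONENTS = {
    "C": "3DS SDK",
    "S": "3DS Server",
    "D": "Directory Server",
    "A": "ACS (bank)",
}


def describe_error(error: Dict[str, Any]) -> str:
    """Turn a 3DS error payload into one readable line for logs or support staff."""
    code = str(error.get("errorCode", ""))
    meaning = ERROR_CODES.get(code, "unknown 3DS error code")
    component = ERROR_COMPONENTS.get(error.get("errorComponent", ""), "unknown component")
    description = error.get("errorDescription", "")
    return f"3DS error {code} ({meaning}) detected by {component}: {description}"


sample = {
    "errorCode": "201",
    "errorComponent": "S",
    "errorDescription": "TdsServerTransID is not received in Cres.",
}
print(describe_error(sample))
```

Reading 201 through a table like this, rather than through the HTTP status code list, is the domain awareness this post argues for.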

The payment gateway has to read this error code, and then provide the right contextual message and instructions to the merchant on what to do in case of a payment failure.

Here is the catch. Not every merchant develops a mechanism to handle this. Instead, they leave it to the payment gateway service provider and depend on it.

Does the merchant bother about handling it at their end?

- As a merchant, I can switch to another payment gateway tomorrow.

- Why should I invest in building, developing, and maintaining this as part of my business when I'm paying another business to do it for me?

- Isn't that a sensible business question or decision?

Now, you tell me: who is expected to handle this 201 error? Who should let me know, on the merchant's web user interface, what to do?

The Case and My Initial Observations

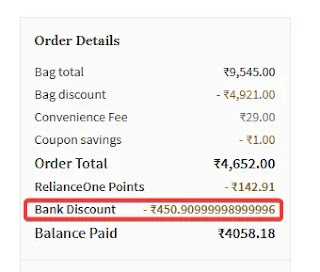

On submitting the challenge given by the bank, the details below are shown to the user.

Pic used from Liudas's post. It is the CRes returned from the bank to the 3DS Server at the payment gateway.

My Observations

- It looks like the ticket was being bought and the payment made in Turkey.

- The payment gateway involved in completing the payment transaction is Payten.

- The user is trying to buy ferry tickets and is making an online payment. This error is seen on submitting the challenge given by the bank.

- It looks like a card was used for the payment.

- In the LinkedIn post, I read that multiple attempts were made to pay, and the same message was seen each time.

- The Error Code returned is 201.

- This means a mandatory (required) data element is missing, which is a protocol violation.

- The Error Component is S, which denotes the 3DS Server.

- That is the component that raised the error.

- There is an error that is not handled.

- The error reads "Unexpected error".

- There is an ID to track it, for auditing and tracking by the payment gateway.

- This ID has nothing to do with the bank or the merchant selling the ferry tickets.

- The message has a version that reads 2.1.0.

- This means the 3DS protocol version used is 2.1.0.

- This is communicated by the 3DS Server to the Directory Server and the bank's server, so that they use the same version in the contract.

- If the same version is not available on the bank's server side, a lower version will be used.

- The error description reads that the TdsServerTransID is not received in the CRes.

- The 3DS component is raising this error.

- TdsServerTransID means ThreeDS Server Transaction ID.

- 3DS means Three-Domain Secure, which is a security protocol used for online card transactions.

- This means the response from the bank is that it cannot fulfil the payment request.

- Because the request from the 3DS layer does not have the TdsServerTransID.

- Wait!

- Do I actually know whether the CReq had the TdsServerTransID in its payload?

- This is critical and important to know.

- The AReq (Authentication Request) will have the TdsServerTransID in its payload.

- That means the ID was present earlier. So, the CReq is fired from the 3DS Server on receiving the ARes.

- The bank received the TdsServerTransID and said it is giving a challenge before proceeding to authorize the payment.

- This response (ARes) from the bank will have the TdsServerTransID.

- Along with it, the following are also present as correlation IDs to track this transaction:

- dsTransID

- The Directory Server ID to track this transaction

- acsTransID

- The bank server ID to track this transaction

- From here on, the CReq payload requires the TdsServerTransID and the acsTransID.

- Where did the TdsServerTransID go missing now? How? Why?

- This needs to be test-investigated. I have shared possible causes in the next section.
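One way to test-investigate this is to capture the messages of a failing transaction in order, and find the first one where the ID disappears or changes. A minimal sketch, assuming the captured messages are available as decoded JSON dictionaries with EMV 3DS field names; `first_break` is a hypothetical helper, and the trace below is made-up data.

```python
from typing import Dict, List, Optional, Tuple

Message = Dict[str, Optional[str]]


def first_break(
    trace: List[Tuple[str, Message]], field: str = "threeDSServerTransID"
) -> Optional[str]:
    """Return where `field` first goes missing or changes in the trace, or None if it is intact."""
    expected: Optional[str] = None
    for name, message in trace:
        value = message.get(field)
        if not value:  # None and "" both count as missing
            return f"{field} missing in {name}"
        if expected is None:
            expected = value  # the first message (normally the AReq) sets the reference value
        elif value != expected:
            return f"{field} changed in {name}: {value!r} != {expected!r}"
    return None


trace = [
    ("AReq", {"threeDSServerTransID": "tds-1"}),
    ("ARes", {"threeDSServerTransID": "tds-1", "acsTransID": "acs-1"}),
    ("CReq", {"acsTransID": "acs-1"}),
]
print(first_break(trace))  # threeDSServerTransID missing in CReq
```

Knowing which hop first lost the ID narrows down which component, and which of the causes listed in the next section, to look at.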

The transaction ID of the merchant selling the ferry ticket is different from the TdsServerTransID.

- The TdsServerTransID is needed for the bank and the payment gateway to complete the transaction.

- Without this TdsServerTransID, the bank cannot proceed to authorize the payment.

All this is happening between the payment gateway [3DS Server] and the bank. The user and the merchant's website are not in the picture here.

Why Did It Happen?

It happened because the CReq from the payment gateway's 3DS Server did not have the TdsServerTransID. That is what the CRes from the bank says.

Note that I presume the bank's systems and services were functioning and serving customers at that point in time. This is an assumption based on my analysis, and I'm aware that I have made it.

What Made the CReq Miss the TdsServerTransID?

- I read that the user made multiple attempts to initiate and make the payment.

- I have multiple perspectives and interpretations, with hypotheses on how this could have led to the missing TdsServerTransID.

- Discussing each hypothesis in detail is not in the scope of this blog post.

- But here are some of the possibilities that could have led to this situation:

- A glitch at the payment gateway at that point in time.

- Someone breaching the connection in the middle and tweaking the request and response over the network.

- Device and hardware

- Geo location

- Network and traffic

- Timeouts

- Latency

- Storage running out

- Configuration gone wrong

- Caching misses -- data not persisted

- Intermediate and dependency services clogged

- A new release deployed and updated

- Downtime in the bank's services

- Intermittent availability of the bank's services

- Mismatched 3DS protocol versions that are not supported or accepted at either end

- And, more!

In short, it was handled well by the bank. I assume the amount was not debited from the user's account. This is equally important, and the LinkedIn post does not say anything about it.

I see that the bank's service worked well in this context and did what was expected of it. The rule of thumb is: when there is a discrepancy between the data expected and the data received, do not authorize the payment request; abort it.

Can the service do more if the TdsServerTransID is missing from the AReq payload?

As a test engineer in the payment gateway engineering team, I would test at least the following, as a must:

- AReq with no TdsServerTransID, and observe what happens!

- Does the 3DS Server still fire an AReq to the bank with no TdsServerTransID?

- If yes, then that is a problem! It should be handled here.

- This problem can be prevented!

- I will ask my team why we are allowing such requests.

- This increases our customer support costs and the operations time spent responding to clients who use our payment gateway.

- And merchants can lose business because of our payment gateway.

- What should the 3DS Server do when an initiated AReq is missing the TdsServerTransID?

- What other data in the transaction and session should be retained and kept intact?

- Will this data change when a fresh TdsServerTransID is created?

- I will explore and figure out what factors caused the 3DS Server to lose track of the user's authentication.
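The first must-test item above can be automated. A minimal sketch with Python's `unittest`, assuming a hypothetical `ThreeDSServer` whose network transport can be swapped for a fake, so the test can check whether a request actually leaves the server. In a real gateway, the system under test and its transport would be your own; the stand-in here only encodes the expected behavior.

```python
import unittest
from typing import Dict, List


class FakeTransport:
    """Records every message the 3DS server would have sent over the network."""

    def __init__(self) -> None:
        self.sent: List[Dict[str, str]] = []

    def post(self, message: Dict[str, str]) -> None:
        self.sent.append(message)


class ThreeDSServer:
    """Hypothetical stand-in for the payment gateway's 3DS Server."""

    def __init__(self, transport: FakeTransport) -> None:
        self.transport = transport

    def send_areq(self, areq: Dict[str, str]) -> bool:
        # Expected behavior: never fire an AReq without a non-empty transaction ID.
        if not areq.get("threeDSServerTransID"):
            return False
        self.transport.post(areq)
        return True


class AReqWithoutTransIDTest(unittest.TestCase):
    def setUp(self) -> None:
        self.transport = FakeTransport()
        self.server = ThreeDSServer(self.transport)

    def test_missing_id_is_not_fired(self) -> None:
        fired = self.server.send_areq({"messageType": "AReq", "messageVersion": "2.1.0"})
        self.assertFalse(fired)
        self.assertEqual(self.transport.sent, [])

    def test_empty_id_is_not_fired(self) -> None:
        fired = self.server.send_areq({"messageType": "AReq", "threeDSServerTransID": ""})
        self.assertFalse(fired)
        self.assertEqual(self.transport.sent, [])

    def test_valid_areq_is_fired_once(self) -> None:
        areq = {"messageType": "AReq", "threeDSServerTransID": "tds-1"}
        self.assertTrue(self.server.send_areq(areq))
        self.assertEqual(self.transport.sent, [areq])
```

Asserting on what the fake transport recorded, not only on the return value, is what answers "does the 3DS Server still fire the request?".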

Who Created The Problem?

- From the error shared, I see that:

- It is the 3DS Server that created this problem, presuming the bank and its response are right.

Is This An API Problem?

- No, it is not an API problem.

- If we call it an API problem, we have not understood what an API is.

Whose Problem Is It?

- It is the problem of the service that fired the CReq.

- Because it fired the CReq without the TdsServerTransID, which is a mandatory key-value in the payload.

- The TdsServerTransID value can be neither null nor empty in the JSON.

It appears to be a business logic problem of the service on the 3DS Server side, for firing a CReq with no TdsServerTransID.

How Can It Be Prevented?

- By making sure the CReq always has a distinct TdsServerTransID.

- If there is no distinct TdsServerTransID in a fresh CReq about to be fired, abort the request.

- Create the distinct TdsServerTransID, and only then construct the request payload before firing the CReq.

To make sure of this, the payment gateway [3DS Server] should preserve the TdsServerTransID, dsTransID, and acsTransID of the session. If any one of these does not match at any of the components during the transaction, aborting the transaction is the best and right action.
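Preserving the three IDs and aborting on any mismatch can be sketched as follows. This is a minimal sketch, assuming the IDs are kept in an in-memory session object and that messages are decoded JSON dictionaries; `SessionIDs`, `verify_message`, `EXPECTED_IDS`, and `TransactionAborted` are hypothetical names. The IDs checked per message type follow the field lists given earlier in this post (the ARes carries all three; the CReq and CRes carry the 3DS Server and ACS IDs).

```python
from dataclasses import dataclass
from typing import Dict, Optional


class TransactionAborted(Exception):
    """Raised when a message's correlation IDs cannot be trusted."""


@dataclass(frozen=True)
class SessionIDs:
    """The IDs preserved for one 3DS transaction."""
    threeDSServerTransID: str
    dsTransID: str
    acsTransID: str


# Which preserved IDs each message type must carry.
EXPECTED_IDS = {
    "ARes": ("threeDSServerTransID", "dsTransID", "acsTransID"),
    "CReq": ("threeDSServerTransID", "acsTransID"),
    "CRes": ("threeDSServerTransID", "acsTransID"),
}


def verify_message(session: SessionIDs, message_type: str, message: Dict[str, Optional[str]]) -> None:
    """Abort the transaction if any expected ID is missing, empty, or different from the session."""
    for field in EXPECTED_IDS[message_type]:
        value = message.get(field)
        if not value:
            raise TransactionAborted(f"{message_type}: {field} is missing or empty")
        if value != getattr(session, field):
            raise TransactionAborted(f"{message_type}: {field} does not match the session")


session = SessionIDs("tds-1", "ds-1", "acs-1")
verify_message(session, "CRes", {"threeDSServerTransID": "tds-1", "acsTransID": "acs-1"})  # passes
try:
    verify_message(session, "CReq", {"acsTransID": "acs-1"})
except TransactionAborted as exc:
    print(exc)  # CReq: threeDSServerTransID is missing or empty
```

Calling such a check before firing the CReq and again on receiving the CRes turns "abort on any mismatch" into a single, testable rule.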

Can you recall your daily-life experience of being told not to refresh or click the browser's back button once you have initiated a payment transaction on the web or a mobile app? This is the reason! It preserves the transaction data in the session between these systems -- the merchant's web or app, the payment gateway [3DS Server], the Directory Server, and the bank's server.

This is an automation candidate. It has to be part of the daily automated test runs.

Who Should Be Fixing It?

- In my opinion, based on today's analysis and the assumptions I have made, it should be fixed by the payment gateway.

- The payment gateway has to interpret the 3DS error code returned by the bank.

- And then initiate the appropriate action, as the payment transaction has failed.

- A new transaction between the 3DS Server and the bank has to be started for that merchant's order.

- If that cannot happen, the payment gateway should abort all currently open sessions tied to that merchant's order.

- And let the user know what is happening, and then direct the user.

- The payment gateway can read the ARes and CRes.

- That means the payment gateway can read the HTTP status code of the ARes and CRes.

- If there is an error code in the ARes or CRes,

- Then the gateway should assert on the 3DS error code along with the HTTP status code.

- For example,

- Say the HTTP status code is 400 and the 3DS error code in the CRes is 201.

- Assert on these two, and direct the user with an appropriate contextual message and direction.

- In general, this is how custom error codes are handled by a client on receiving them from services.

Note that it is an error code, not a response code. The two are different. And the HTTP status code is different from both.
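The idea of asserting on both codes can be sketched as a small decision function. A minimal sketch, assuming the response body is decoded JSON with an `errorCode` field; the `Outcome` type, the action names, and the user messages are hypothetical, and the mapping (start a fresh 3DS transaction on 201, otherwise abort the order's open sessions) follows the steps described above rather than any gateway's documented behavior.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Outcome:
    action: str        # hypothetical next step for the payment gateway
    user_message: str  # what the merchant's UI shows instead of raw JSON


def classify(http_status: int, body: Dict[str, Any]) -> Outcome:
    """Decide on BOTH the HTTP status code and the 3DS error code; neither alone is enough."""
    raw_code = body.get("errorCode")
    error_code = "" if raw_code is None else str(raw_code)

    if 200 <= http_status < 300 and not error_code:
        return Outcome("proceed", "")
    if error_code == "201":
        # Required data element missing: this 3DS transaction cannot be trusted, start a new one.
        return Outcome(
            "start_new_3ds_transaction",
            "We could not verify your card this time. Please try the payment again.",
        )
    # Any other 3DS error, or an HTTP failure without a 3DS error code.
    return Outcome(
        "abort_open_sessions_for_order",
        "The payment could not be completed. Please try again later.",
    )


print(classify(400, {"errorCode": "201", "errorComponent": "S"}).action)
print(classify(200, {"errorCode": 201}).action)
```

Both calls print `start_new_3ds_transaction`. The second one matters: an HTTP 200 carrying 3DS error 201 is still a failed payment, which is why checking the HTTP status code alone is not enough.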

Why It Is Not An API Problem?

An API is an interface that exposes the available services to the consumer.

It is the service that collects the data, builds the request payload, and expects the payload to process.

This request passes through an interface that is open to the consumer. This interface is called an API -- Application Programming Interface.

An analogy:

- A car has a gear stick to switch gears.

- This is an interface to the car's gear system.

- The driver uses the car's gear system through this gear stick.

- The gear box in the car is a service.

- The gear box adjusts and responds by switching gears per the driver's input.

- The different operations [business logic] provided by the gear box service are:

- Reverse

- Parking

- Neutral

- Switching the gear and assisting other components to speed up or speed down the car.

Can the interface have a problem? Yes, it can. In that case, the service will not be available to serve or to be discovered.

- For example, if the gear stick has a problem, I cannot use the gears of the car, but the gear box can still be fully functional and in working condition.

- It is just the interface [gear stick] that has the problem, and as a result the consumer [driver] is unable to use the service [gear box].

In this case, the payment gateway could fire the CReq to the bank. That is, the API exposing this service is functioning. The problem is in a service. It is the service that failed to ensure and mandate the presence of the TdsServerTransID: a business logic problem.
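The gear stick and gear box split maps onto code roughly like this. A minimal sketch with hypothetical names: `creq_endpoint` plays the interface (API) and only passes input to the service and reports the result, while `ChallengeService` owns the business rule that a CReq must carry non-empty IDs. Returning 422 for a refused request is an assumption for illustration.

```python
from typing import Any, Dict, Tuple

MANDATORY_FIELDS = ("threeDSServerTransID", "acsTransID")


class ChallengeService:
    """Service (the gear box): owns the business logic for building a CReq."""

    def build_creq(self, session: Dict[str, str]) -> Dict[str, str]:
        for field in MANDATORY_FIELDS:
            if not session.get(field):
                raise ValueError(f"{field} is mandatory; refusing to build the CReq")
        return {
            "messageType": "CReq",
            "messageVersion": session.get("messageVersion", "2.1.0"),
            "threeDSServerTransID": session["threeDSServerTransID"],
            "acsTransID": session["acsTransID"],
        }


def creq_endpoint(service: ChallengeService, session: Dict[str, str]) -> Tuple[int, Dict[str, Any]]:
    """Interface (the gear stick): hands input to the service and reports back, no business rules."""
    try:
        return 200, service.build_creq(session)
    except ValueError as exc:
        return 422, {"error": str(exc)}


status, body = creq_endpoint(ChallengeService(), {"acsTransID": "acs-1"})
print(status, body["error"])  # 422 threeDSServerTransID is mandatory; refusing to build the CReq
```

If the endpoint responds but the service lets a CReq through without the ID, the bug lives in `ChallengeService`, not in the API layer.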

In short, we software engineers [including me] use the term API vaguely, without a sense of what it means. This is my observation. Further, software testers have tossed this term around in all possible ways and learned it incorrectly. I have no doubt about this.

Next time you say it is an API problem, rethink what you are saying. Is it the API, or the service(s) accessed through that API? The distinction is useful when describing the behavior of the system and its layers.

I cannot say it is a gear box problem when the gear stick is not usable, and vice versa. You see that?

To wrap up:

- Testing skills and testing help when combined with awareness and skills of the tech stack used to build the software system.

- Testing skills, programming, and tech skills are not enough!

- Domain skills and awareness are essential and critical.

- If one is aware of the domain where the problem is observed, then 201 will not be read as an HTTP status code.

- Building domain skills and maintaining the knowledge base as a GitHub project helps in the long run.

- Interfaces and their gateways should not be overloaded with the additional responsibility of making sure the mandatory key-values are present.

- If one does so, one should learn why that is a problem: it is an anti-pattern and not a recommended software engineering practice.

- It is the services that have problems most of the time.

- Interfaces and their gateways will have discovery, orchestration, and traffic problems, along with security risks.

References:

- https://httpstatuses.io/

- https://developer.elavon.com/products/3dsecure2/v1/api-reference

- https://developer.ravelin.com/psp/api/endpoints/3d-secure/errors/

- https://developer.elavon.com/products/3dsecure2/v1/3ds-error-codes

- threeDSServerTransID (in our case the JSON reads as TdsServerTransID)

- 3DS Server Transaction ID

- Universally unique transaction identifier assigned by the 3DS server to identify a single transaction.

- The 3DS server auto-populates and appends this field value in the authentication request (AReq) it sends to the bank, in addition to the data you send.

- This value can also be found by a lookup in the response received by this service -- /3ds2/lookup